[st_row][st_column][st_text ]With the world moving towards the cloud, online data storage is becoming increasingly popular because it is easily accessible and scalable, especially for small and medium-sized businesses. The sharp increase in cloud adoption in recent years has led to growing concern about cloud security, even on Amazon Web Services, one of the safest and highest-performing cloud service providers.

Amazon offers multiple storage and computing services, with EC2, S3 and EBS among the most widely used in the industry. EC2 is a computing service that gives you access to cloud-based servers. For storage attached to those servers, you can opt for either EBS (Elastic Block Store) or EFS (Elastic File System), and you typically need an EC2 instance to access either of them. Both storage systems have their own benefits and limitations.

However, there is a third storage system in AWS that has gained a lot of traction in the past few years: Amazon S3 (Simple Storage Service). S3 stores files as objects in buckets. A bucket has no fixed capacity limit, so it can hold a virtually unlimited amount of data, which is the USP of this storage system. Unlike EBS and EFS, S3 is reached through its own web API and does not require EC2, although applications running on EC2 commonly use it. To get an idea of how much you can store in S3, consider the media services provider Netflix. The company uses S3 to store massive amounts of customer data and movies, and leverages EC2 to serve that data to users in various regions of the world.[/st_text][st_heading ]With Big Data Comes Big Responsibility [/st_heading][st_text ]Amazon S3 wouldn’t have been a success if it weren’t for its top-notch access security system. With colossal amounts of data belonging to about a million customers across the globe, access control was a huge cloud security challenge for the largest cloud service provider (in terms of market share).
It had to make sure that when enterprises, especially those in the healthcare and finance industries, store their data on S3, it stays secure. For that, Amazon provides a detailed set of access controls that users configure for their resources. These access controls help ensure that data stored on S3 is safe from potential threats.[/st_text][st_image el_title=”AWS-S3-access-control-tools” image_file=”https://cloudnosys.com/wp-content/uploads/2019/12/AWS-S3-access-control-tools-e1575486126343.png” image_size_wrapper=”” image_size=”full” image_alt=”AWS-S3-access-control-tools” ][/st_image][st_heading ]Access Control Lists (ACLs) in Amazon S3[/st_heading][st_text ]An ACL is a list of grantees, both authenticated and unauthenticated, that have certain access to a resource. There are object ACLs and bucket ACLs. Any AWS account or Canonical ID (explained below) listed in a resource’s ACL has access to that particular resource. The extent of this access is determined by the permission granted. (See Figure 1 for the different types of permissions.)

Buckets and Objects

In Amazon S3, you create buckets in which you store objects. Buckets and objects are the resources of Amazon S3. Think of a bucket as a folder and of objects as the files you put in it. Since you create these buckets and upload the objects, you are the owner of these resources. However, the owner of a bucket can allow other accounts to upload objects to the same bucket, so two users (two different accounts) can share one bucket. There are certain limitations here. To make this easier to follow, say that ‘USER A’ creates a bucket on Amazon S3 and allows another user, ‘USER B,’ to access it. Both USER A and USER B can now upload objects to the same bucket. However, under the ACL-based ownership model described here, this does not give USER A any access to the objects uploaded by USER B, even though USER A owns the bucket. Although the bucket owner cannot read objects uploaded by other accounts, it can still delete or archive them.

Authentication of ‘WRITE’ access

The owner of a bucket can make the bucket publicly writable. In that case, any unauthenticated request (one made without AWS credentials) can upload objects to the bucket. PUT Object requests are a good example: an unauthenticated caller can only PUT an object if the bucket owner has opened the bucket to the public, that is, if the anonymous caller effectively holds the “WRITE” permission on the bucket. Authenticated accounts can likewise receive “WRITE” grants from the bucket owner, which lets them upload objects to the bucket.

For an unauthenticated PUT Object request to succeed, the requester needs to be covered by the bucket’s Access Control List (ACL). The bucket owner can either put that anonymous user on the bucket ACL or grant “WRITE” access to ‘All Users’. In both cases, the anonymous user can upload objects to the bucket. Even though the uploader has no AWS account, the uploaded object is owned by that anonymous user, so by default only that user has access to it.

Meanwhile, when an authenticated request is made to upload an object, the request is signed with a signature value derived from the credentials of the account that issued it.
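To make the ‘All Users’ grant concrete, here is a minimal Python sketch that models a bucket ACL as plain data. The dictionary layout mirrors the owner/grants structure S3 uses for ACLs, and the All Users group URI is the one AWS documents. The helper names and the owner ID are hypothetical, chosen only for illustration. Nothing here calls AWS.

```python
# Sketch only: models an S3 ACL as plain data; it does not talk to AWS.

# URI of the predefined "All Users" group (anyone, including anonymous callers).
ALL_USERS_URI = "http://acs.amazonaws.com/groups/global/AllUsers"


def public_write_acl(owner_id):
    """Build an ACL that keeps the owner's FULL_CONTROL and adds a
    WRITE grant for the All Users group (hypothetical helper)."""
    return {
        "Owner": {"ID": owner_id},
        "Grants": [
            {
                "Grantee": {"Type": "CanonicalUser", "ID": owner_id},
                "Permission": "FULL_CONTROL",
            },
            {
                "Grantee": {"Type": "Group", "URI": ALL_USERS_URI},
                "Permission": "WRITE",
            },
        ],
    }


def can_anonymous_write(acl):
    """Return True if the ACL lets unauthenticated callers upload objects."""
    return any(
        grant["Grantee"].get("URI") == ALL_USERS_URI
        and grant["Permission"] in ("WRITE", "FULL_CONTROL")
        for grant in acl["Grants"]
    )


# "hypothetical-owner-canonical-id" is a placeholder, not a real ID.
acl = public_write_acl("hypothetical-owner-canonical-id")
owner_only_acl = {"Owner": acl["Owner"], "Grants": acl["Grants"][:1]}
```

With these definitions, `can_anonymous_write(acl)` is true for the public ACL and false for `owner_only_acl`, which contains only the owner's grant.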

AWS recommendation

According to Amazon, you should not use your AWS account root user (the original AWS account) to make authenticated requests to upload objects. Instead, create an IAM user, give that user full access, and then use that user’s credentials to upload objects through authenticated requests, to ensure maximum AWS security.
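A user policy for such an IAM user might look like the sketch below, which builds the JSON policy document in Python. The bucket name `example-bucket` and the helper name are hypothetical. The sketch deliberately grants only `s3:PutObject` by default, which is narrower than the full access mentioned above. Widen the action list if your use case really needs more.

```python
import json


def upload_policy(bucket_name, actions=("s3:PutObject",)):
    """Build an IAM policy document that allows the given S3 actions
    on every object in one bucket (hypothetical helper)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(actions),
                # Object-level actions apply to the objects inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


# "example-bucket" is a placeholder name used for illustration only.
policy_json = json.dumps(upload_policy("example-bucket"), indent=2)
print(policy_json)
```

The resulting JSON can be attached to the IAM user as an inline or managed policy. Starting from the narrowest set of actions makes it easier to see what the user is actually allowed to do.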

Canonical ID and its access to objects

Unauthenticated users don’t have an AWS account. They can only upload an object to a bucket that is open to the public. Earlier, we discussed that by default only the owner of an object can access it, which means that only the unauthenticated uploader can access the objects it uploaded. This is where it gets tricky. An object needs to be linked to a user ID for ownership, but unauthenticated users don’t have an AWS ID. How, then, does AWS record the owner of an object uploaded by a user who has no valid AWS account? When an unauthenticated request uploads an object to a public bucket, AWS assigns it a special Canonical ID, a long string (65a011a29cdf8ec533ec3d1ccaae921c) that stands for the anonymous user. The uploaded object is then linked to this canonical ID to identify its owner. AWS also puts this ID into the object’s ACL.

Granting access permissions

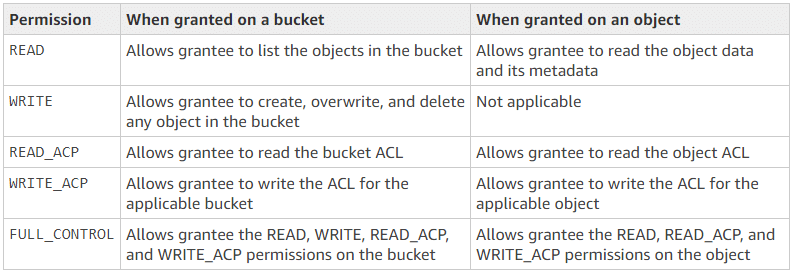

Amazon S3 has two types of access control lists: one for buckets and one for objects. While both ACLs use the same set of permissions (see Figure 1), each permission means something different depending on where it is granted. For example, as shown in the first row of Figure 1, a bucket owner can grant READ access on the bucket to another AWS user, which allows that user to “list objects in the bucket.” If the same permission (READ) is granted on an object, the grantee can “read the object data and its metadata.”
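The bucket-versus-object distinction can be written down as a small lookup table. The sketch below is illustrative. The READ row uses the wording quoted above, while the remaining rows follow the AWS ACL documentation as best understood and may be phrased differently in Figure 1. The `describe` helper is hypothetical.

```python
# Sketch: what each ACL permission means on a bucket versus on an object.
# None marks a combination that does not apply.
ACL_PERMISSIONS = {
    "READ": {
        "bucket": "list the objects in the bucket",
        "object": "read the object data and its metadata",
    },
    "WRITE": {
        "bucket": "create, overwrite, and delete objects in the bucket",
        "object": None,
    },
    "READ_ACP": {
        "bucket": "read the bucket ACL",
        "object": "read the object ACL",
    },
    "WRITE_ACP": {
        "bucket": "write the bucket ACL",
        "object": "write the object ACL",
    },
    "FULL_CONTROL": {
        "bucket": "READ, WRITE, READ_ACP, and WRITE_ACP on the bucket",
        "object": "READ, READ_ACP, and WRITE_ACP on the object",
    },
}


def describe(permission, resource_type):
    """Return what a permission allows on a 'bucket' or an 'object'."""
    meaning = ACL_PERMISSIONS[permission][resource_type]
    return meaning if meaning is not None else "not applicable"


print(describe("READ", "bucket"))
print(describe("READ", "object"))
```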

Besides ACLs, access in Amazon S3 can be granted through two kinds of policies:

- Resource-based policies: These are attached to an Amazon resource, such as a bucket

- User policies: These are attached to IAM users in your AWS account

These policies can only be set and applied to an object or bucket by the owner of that particular resource.[/st_text][st_heading ]A Common Ambiguity When Granting Access within S3[/st_heading][st_text ]Since only the owner of a bucket or object can set up access policies for it, users are often confused about granting access to objects that other users own within a bucket. For example, say you own a bucket and have allowed an unauthenticated (anonymous) user to put an object in it. Since you own the bucket and not the object, how do you devise policies for objects within the bucket? How would they even apply to an object that another user owns? This is where you need to figure out whether you need a resource-based policy or a user-based policy, and whether the two can be combined to grant permissions within Amazon S3.

Solving the common questions

There are different scenarios in which you can choose to use a bucket ACL, an object ACL, or a policy attached to a particular user account. The following are some of these scenarios regarding access permissions:

- As a bucket owner, you can create a bucket policy to manage permissions for any object that you own yourself.

- If another user has placed an object in your bucket, that user can write policies and grant permissions related to that object.

- A user that doesn’t own a certain object can only gain access to it if the object owner puts that user in the object ACL. An object ACL can hold a maximum of 100 grants.

- If you are a bucket owner and want to give another AWS account a particular type of access (READ_ACP access, for instance) to your objects, you can do that through a bucket policy. However, if permissions differ from object to object, managing them through a bucket policy may not be a good idea, because the policy becomes difficult to maintain and is subject to several limitations.

- You can provide certain access permissions to other AWS accounts via your bucket policy. (Refer to Figure 1 above to see the permissions you can grant in an object or bucket ACL.)

- You (the parent account) can create IAM users and attach a user policy to each one to grant access to an object. However, to access an object owned by another account, that user needs permission from both its parent account and the owner of the object. For instance, if you, as the bucket owner, create a user and allow it to access an object owned by another account, the user needs permission not only from you but also from the owner of the object it is trying to access.
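The last scenario, where an IAM user needs permission from both sides, can be expressed as a tiny decision function. This is a deliberately simplified model of the rule described above, not a reproduction of how AWS evaluates policies. The function name, parameters, and account names are all hypothetical.

```python
def user_can_access(user_account, object_owner, parent_allows, owner_allows):
    """Simplified model: may an IAM user access an object?

    user_account  -- account that created the IAM user (its parent)
    object_owner  -- account that owns the object
    parent_allows -- True if the parent's user policy grants the access
    owner_allows  -- True if the object owner grants the access
                     (for example through the object ACL)
    """
    if user_account == object_owner:
        # Same account: the parent is also the owner, so one grant suffices.
        return parent_allows
    # Different accounts: both the parent and the object owner must agree.
    return parent_allows and owner_allows


# Placeholder account names used for illustration only.
print(user_can_access("account-a", "account-b", True, False))
print(user_can_access("account-a", "account-b", True, True))
```

The first call models a user whose parent account allows the access while the object owner does not, so the function returns `False`. The second call has both permissions in place and returns `True`.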

AWS Cloud Security Tips for Access Controls

Dealing with permissions on Amazon S3 requires a lot of care to make sure that no unauthorized person can access your Amazon resources. The following are a few security tips to bear in mind in order to keep your S3 buckets safe. The Cloudnosys bucket Health Check can check all of the rules noted below, and many more:

Use correct policies

It is important to set bucket and object policies so that the general public cannot access critical data. You can limit public access to Amazon resources by setting up the necessary controls.

Beware of wildcard identities

Any policy that allows a wildcard identity means that anyone from the general public can access your Amazon resources or perform the allowed actions on them.

Monitor your ACLs

Being in an ACL gives both authenticated and unauthenticated users certain access to your resources. Recheck your ACLs and make sure that every user is granted only the access they need. For example, you might want an unauthenticated user in an object ACL to have READ access only. Double-check the ACL to ensure that this user is not given WRITE or FULL_CONTROL.

IAM roles for EC2 applications

Applications running on EC2 require AWS credentials. However, that does not mean you should store those credentials on the EC2 instance, since that can lead to serious repercussions if the credentials are compromised. A way around this problem is to use IAM roles, which give the application temporary credentials so that no long-term access keys need to be stored on the instance.

Multi-factor authentication

Objects in a bucket can be of great value, and you wouldn’t want to lose them at any cost. However, anyone who gains access to the owner’s credentials, or to those of a privileged IAM user, would be able to delete the objects. To prevent that, it is advisable to enable MFA, which adds another layer of authentication before such a crucial action can be taken.
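The wildcard and ACL tips lend themselves to simple automated checks. The following Python sketch scans a bucket policy for `Allow` statements with a wildcard principal and an ACL for public grants beyond READ. It works on plain dictionaries shaped like policy and ACL documents. The function names and sample data are hypothetical, and the sketch is not how any particular scanning product works.

```python
ALL_USERS_URI = "http://acs.amazonaws.com/groups/global/AllUsers"


def wildcard_statements(policy):
    """Return the Sids of Allow statements whose principal is a wildcard."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may not be in a list
        statements = [statements]
    findings = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal")
        # Principal can be "*" or a mapping such as {"AWS": "*"}.
        aws = principal.get("AWS") if isinstance(principal, dict) else principal
        values = aws if isinstance(aws, list) else [aws]
        if "*" in values:
            # A Condition block may still narrow this; review it manually.
            findings.append(statement.get("Sid", "<no Sid>"))
    return findings


def excessive_public_grants(acl, allowed=("READ",)):
    """Return permissions granted to All Users beyond the allowed ones."""
    return [
        grant["Permission"]
        for grant in acl.get("Grants", [])
        if grant.get("Grantee", {}).get("URI") == ALL_USERS_URI
        and grant["Permission"] not in allowed
    ]


# Hypothetical sample documents for illustration only.
sample_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Sid": "PartnerRead", "Effect": "Allow",
         "Principal": {"AWS": "arn:aws:iam::111122223333:root"},
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
    ],
}
sample_acl = {
    "Grants": [
        {"Grantee": {"Type": "Group", "URI": ALL_USERS_URI}, "Permission": "READ"},
        {"Grantee": {"Type": "Group", "URI": ALL_USERS_URI}, "Permission": "WRITE"},
    ]
}

print(wildcard_statements(sample_policy))
print(excessive_public_grants(sample_acl))
```

For the sample data, the first check flags the `PublicRead` statement and the second flags the public `WRITE` grant. A finding is a prompt for review and does not prove a misconfiguration, since some buckets are public on purpose.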
Amazon S3 is gaining traction, especially in industries where large amounts of data need to be stored and swiftly accessed by multiple users in different regions. Amazon offers ‘AWS Trusted Advisor’ to help ensure the proper use of S3. However, Cloudnosys provides you with more personalized insights into your Amazon S3 implementation, with S3 health checks, an assessment of the reliability of your cloud security solution, and best practices tailored to your business’s IT infrastructure.[/st_text][st_heading ]Challenges Faced by Businesses in Cloud Security[/st_heading][st_text ]For an individual managing their own data, monitoring access control can be easy. Businesses, however, have large data sets to manage. A running business’s data grows at a great pace, because operations both small and large keep adding to it. Businesses planning to expand have even greater difficulty keeping track of all of their resources, and scaling can be quite difficult if you attempt it on your own. Let’s talk about the major challenges faced by most businesses, as well as by owners and managers of big data.

Data Sprawl

When you run an organization in the cloud, your data usually ends up divided across far too many buckets. It is spread all over the place. On top of that, more data is constantly being generated, and that data is most probably scattered too. This makes management and control very tough. There are too many people to manage access for, and a huge amount of data whose ACLs need to be sorted and managed. It is hard to micromanage the access of hundreds of users to thousands of datasets. What you need here is automation: software that can take care of repetitive operations such as managing and sorting data, checking who has access to it, detecting suspicious activity, and so on. Cloudnosys provides exactly this solution. It can scan your entire system no matter how much data you have or how scattered it is.

Visibility

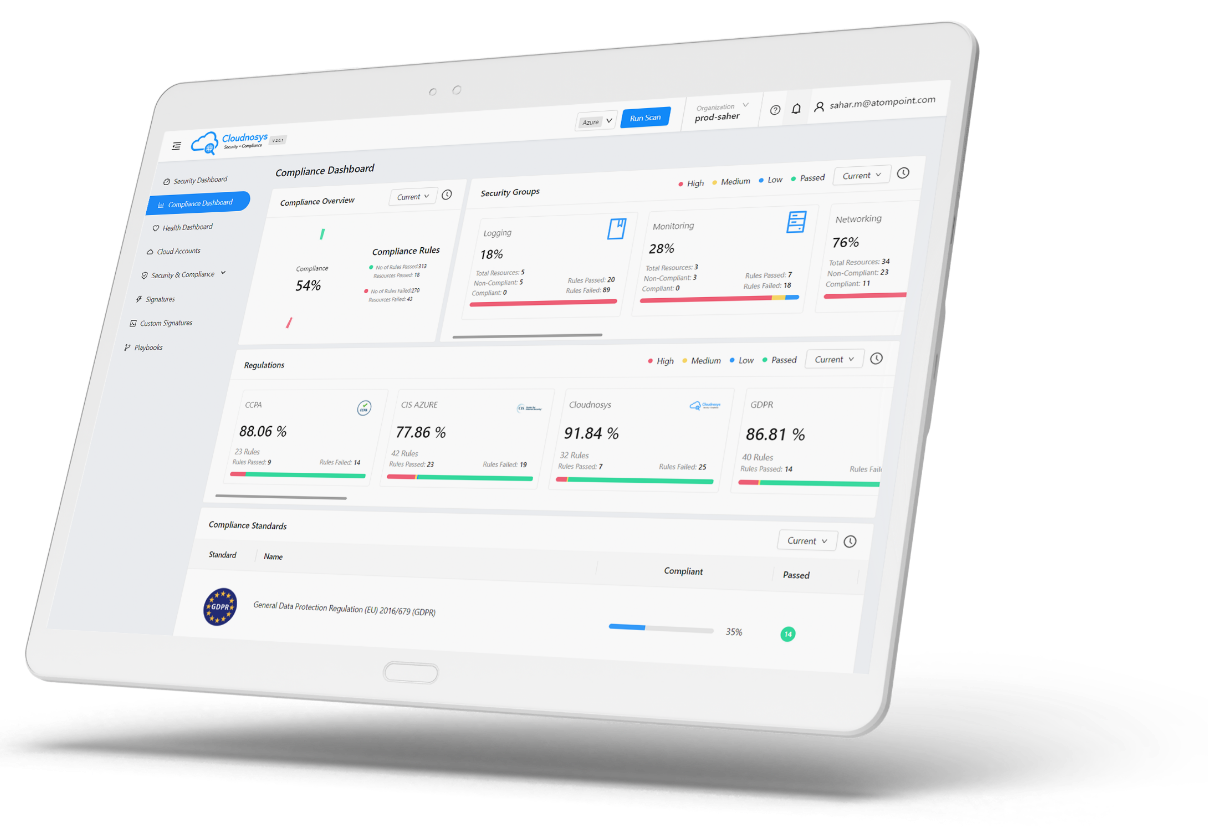

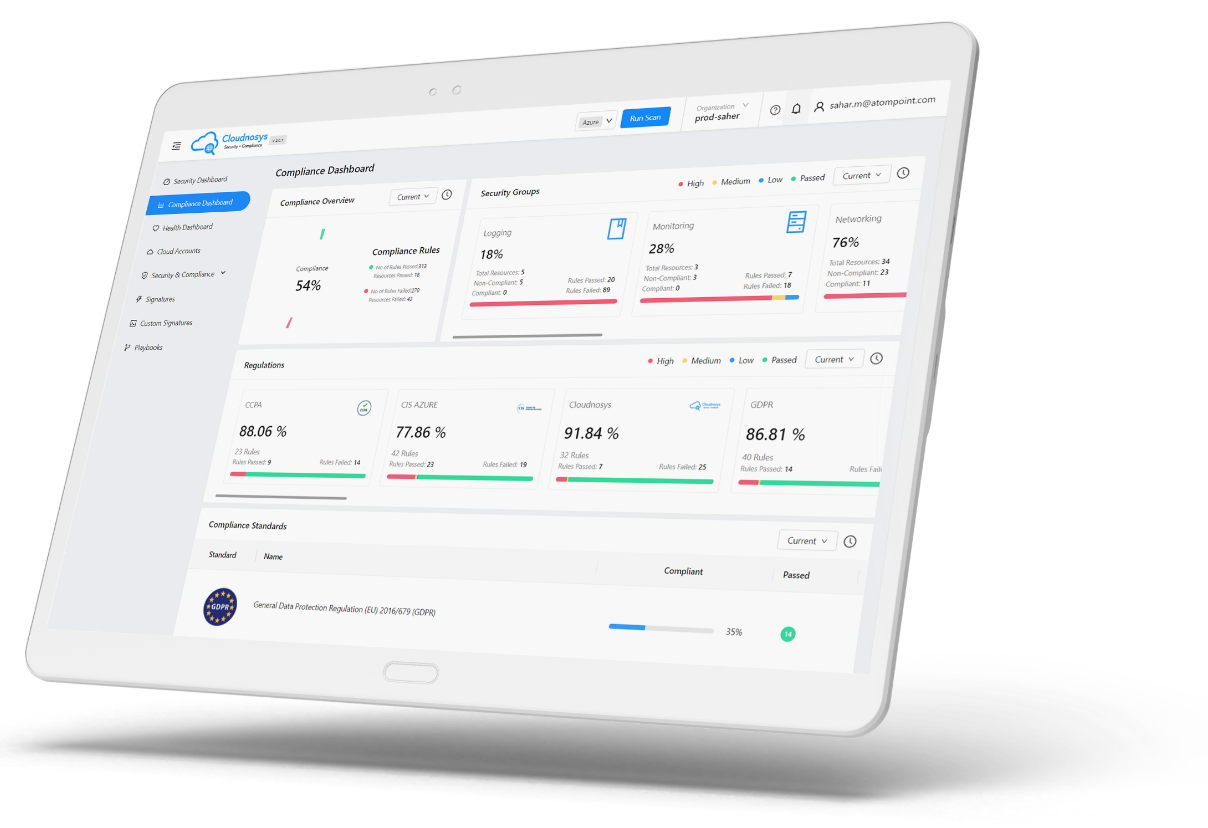

This challenge is a byproduct of data sprawl. Data that is spread all over the place, stored in multiple buckets and many other locations, is hard to keep track of. This means much of your data is not visible to you. There are many reasons you can’t view it all, lack of time and energy being one of them, since doing so means going through a great many buckets and long lists of objects. To increase visibility and get a holistic view, you need an automated service to do the searching and analyzing for you. What you need are concise and comprehensive reports on your cloud infrastructure to ensure there are no security threats. You need existing problems to be highlighted without having to check gigabytes upon gigabytes of data yourself. Cloudnosys has you covered here too. It provides an automated service that checks all your systems, detects possible threats and vulnerabilities, and highlights them in simple, visual ways. You get an overview of all your systems and data in one place, which gives you a bird’s-eye view of your business and operations. This makes decision making easier and frees you from the basic, mundane and overwhelmingly repetitive task of scanning your data.

Governance

Governance is another challenge caused by decreased visibility and data sprawl. How can you manage data that is not easily visible to you? That is the obvious reason it can be a challenge. But even if the data were visible, heavy sprawl makes managing and governing it difficult or impossible. Managing an extensive number of ACLs and user permissions and checking bucket policies all gets hard when your data is spread so widely, and governing it requires micromanagement. Automation can solve this problem too. With Cloudnosys, you don’t just gain visibility over your entire system, threats and vulnerabilities included. You also get solutions to the problems it highlights. Cloudnosys offers its CloudEye, which detects threats and vulnerabilities and suggests how to solve them, thereby strengthening your infrastructure and making it easier for you to understand everything.

Compliance

There are rules, regulations and laws established internationally for the sole purpose of cyber security. Following those rules is essential, not only to avoid penalties but also to ensure your own cloud security. The problem is that there are too many of these rules to read and keep track of. Many might even be irrelevant to your business, but you have to go through them anyway in order to find the relevant ones. They are written in quite technical terms, which makes them nearly impossible for laymen to understand. Reading and understanding aren’t the only challenges here. You also have to go through your entire infrastructure and make sure your systems are compliant down to the smallest detail. This is a really tough job, and when it is done manually it is prone to errors and inefficiency. The better option here is automation. Cloudnosys solves this problem as well. It offers software that has all the rules fed into it. It scans your systems, checks their alignment with the rules, and then reports back on all the areas that need attention. This software updates itself as well, which means you don’t have to worry about looking out for new rules either.
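The idea of software with “the rules fed into it” can be illustrated with a small rules-as-data sketch in Python. The rule IDs, descriptions, bucket fields, and bucket names below are invented for illustration. They are not taken from any regulation or from how Cloudnosys implements its checks.

```python
# Sketch: each rule is data (id, description, check function), so new rules
# can be added without changing the scanning loop.
RULES = [
    ("RULE-1", "Bucket must not allow public write",
     lambda bucket: not bucket["public_write"]),
    ("RULE-2", "MFA should be required to delete objects",
     lambda bucket: bucket["mfa_delete"]),
]


def evaluate(buckets, rules=RULES):
    """Return (bucket name, rule id, description) for every failed rule."""
    report = []
    for bucket in buckets:
        for rule_id, description, check in rules:
            if not check(bucket):
                report.append((bucket["name"], rule_id, description))
    return report


# Hypothetical inventory; a real scan would gather this from the cloud account.
inventory = [
    {"name": "reports", "public_write": False, "mfa_delete": True},
    {"name": "uploads", "public_write": True, "mfa_delete": False},
]

for finding in evaluate(inventory):
    print(finding)
```

Keeping the rules separate from the scanning loop is what lets such a tool pick up new or changed rules without its scanning logic being rewritten.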